System Failure: 7 Shocking Causes and How to Prevent Them

Ever felt like everything just stopped working at once? That’s a system failure in action—silent, sudden, and devastating. From power grids to software networks, when systems collapse, chaos follows. Let’s dive into what really causes them and how we can stop the next big crash before it happens.

What Is a System Failure? Defining the Collapse

A system failure occurs when a network, machine, process, or organization fails to perform its intended function, leading to partial or total breakdown. These failures aren’t limited to technology—they span industries, infrastructures, and even human organizations. Understanding the root definition helps us anticipate, detect, and prevent future disasters.

The Technical Definition of System Failure

In engineering and computer science, a system failure is defined as the inability of a system to deliver its expected outputs due to internal faults, external shocks, or design flaws. According to the ISO/IEC/IEEE 24765:2010 standard, a system failure involves a deviation from specified behavior that results in service disruption.

- Failures can be transient (temporary), intermittent, or permanent.

- They may stem from hardware malfunctions, software bugs, or environmental stressors.

- Detection often relies on monitoring tools, error logs, and redundancy checks.

“A system is only as strong as its weakest component.” — Charles Perrow, Normal Accidents: Living with High-Risk Technologies

Types of System Failures Across Industries

Different sectors experience system failure in unique ways. For example:

- IT Systems: Server crashes, data corruption, or network outages.

- Power Grids: Blackouts caused by overload or cyberattacks.

- Healthcare: Medical device malfunction or EHR (Electronic Health Record) downtime.

- Transportation: Air traffic control errors or train signaling failures.

- Finance: Stock exchange halts or banking transaction errors.

Each industry has specific failure modes, but the underlying principles of detection, mitigation, and recovery remain consistent.

Why System Failure Matters in Modern Society

As our world becomes increasingly interconnected, the impact of a single system failure can ripple across continents. The 2003 Northeast Blackout affected 55 million people across the U.S. and Canada due to a software bug and poor monitoring—costing an estimated $6 billion. This shows how deeply dependent we are on complex, interdependent systems.

Modern life runs on automation, cloud computing, and real-time data. When one node fails, others often follow in a domino effect. This makes understanding system failure not just a technical issue, but a societal imperative.

Common Causes of System Failure

Behind every system failure lies a chain of causes—some obvious, others hidden in plain sight. Identifying these root causes is the first step toward building resilient systems.

Hardware Malfunctions and Component Wear

Physical components degrade over time. Hard drives fail, circuit boards overheat, and sensors drift out of calibration. In data centers, hardware failure accounts for nearly 20% of unplanned outages, according to an Uptime Institute report.

- Common culprits: power supply units, cooling fans, memory modules.

- Environmental factors like dust, humidity, and temperature accelerate wear.

- Predictive maintenance using AI can reduce hardware-related failures by up to 40%.

Software Bugs and Coding Errors

Even the most rigorously tested software contains bugs. A single line of faulty code can trigger a system failure. The 1996 Ariane 5 rocket explosion was caused by an unchecked conversion of a 64-bit floating-point value to a 16-bit signed integer, which overflowed; the loss is commonly put at about $370 million.

- Common software failure types: buffer overflows, race conditions, null pointer exceptions.

- Agile development and CI/CD pipelines help catch bugs early—but don’t eliminate risk.

- Open-source software, while powerful, introduces third-party vulnerabilities if not audited.
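
To make the Ariane 5 style of bug concrete, here is a minimal Python sketch of a narrowing conversion that checks its range before converting. The function names and sample values are hypothetical and are not taken from the flight software; the sketch only illustrates the general idea that an out-of-range value should be handled by an explicit policy (fail loudly or clamp) rather than left to chance.

```python
INT16_MIN, INT16_MAX = -32768, 32767


def to_int16_checked(value: float) -> int:
    """Convert a float to a 16-bit signed integer, rejecting out-of-range input."""
    truncated = int(value)  # int() truncates toward zero
    if not INT16_MIN <= truncated <= INT16_MAX:
        raise OverflowError(f"{value} does not fit in a 16-bit signed integer")
    return truncated


def to_int16_saturating(value: float) -> int:
    """Alternative policy: clamp to the representable range instead of failing."""
    return max(INT16_MIN, min(INT16_MAX, int(value)))
```

Which policy is right depends on the system: raising forces the caller to decide what to do, while clamping keeps the system running with a degraded value.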

“One of the main causes of system failure is the assumption that software is infallible.” — Nancy Leveson, Engineering Safe and Secure Systems

Human Error and Operational Mistakes

People are often the weakest link. Misconfigured servers, accidental deletions, or incorrect command inputs can bring down entire systems. In 2017, an Amazon S3 outage was triggered by an engineer typing a wrong command during routine debugging.

- Human error accounts for over 20% of data center outages.

- Stress, fatigue, and lack of training increase the likelihood of mistakes.

- Implementing strict access controls and change management protocols reduces risk.

System Failure in Critical Infrastructure

When critical infrastructure fails, lives are at stake. Power, water, transportation, and communication systems must operate with near-perfect reliability. Yet, they remain vulnerable.

Power Grid Failures and Blackouts

Power grids are complex networks balancing supply and demand in real time. A failure in one substation can cascade across regions. The 2019 Venezuela blackout, which lasted days, was attributed to both technical failure and poor maintenance.

- Cascading failures occur when one component’s failure overloads others.

- Smart grids use sensors and automation to detect and isolate faults faster.

- Renewable energy integration adds complexity due to variable output.
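
The cascading mechanism described above can be sketched as a toy simulation. This is a deliberately simplified model, assuming that a failed node's load is split evenly among all surviving nodes; real grids redistribute power according to network topology and physics. The function name and the numbers in the usage note are hypothetical.

```python
def simulate_cascade(loads, capacities, first_failure):
    """Return the set of failed node indices once load redistribution settles."""
    failed = {first_failure}
    current = list(loads)
    shed = current[first_failure]  # load that must move to surviving nodes
    current[first_failure] = 0.0
    while shed > 0:
        alive = [i for i in range(len(current)) if i not in failed]
        if not alive:
            break  # every node has failed: total blackout
        share = shed / len(alive)
        shed = 0.0
        for i in alive:
            current[i] += share
        for i in alive:
            if current[i] > capacities[i]:
                failed.add(i)
                shed += current[i]
                current[i] = 0.0
    return failed
```

With four nodes each carrying 80 units against a capacity of 100, losing one node overloads the other three and the whole network fails. With each node carrying 50 units, the same failure is absorbed. The difference is the spare capacity available at the moment of failure.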

For more on grid resilience, see the U.S. Department of Energy’s Smart Grid Initiative.

Water Supply System Failures

Contaminated water, pipe bursts, or pump failures can disrupt entire cities. In 2014, Flint, Michigan’s water crisis was a systemic failure involving poor decision-making, inadequate corrosion control, and weak regulatory oversight.

- Aging infrastructure is a major risk—many U.S. water pipes are over 100 years old.

- SCADA (Supervisory Control and Data Acquisition) systems monitor water quality and flow.

- Cyberattacks on water treatment plants have increased, as seen in the 2021 Oldsmar, Florida incident.

Transportation Network Disruptions

From air traffic control to railway signaling, transportation systems rely on precise coordination. A system failure here can lead to delays, accidents, or fatalities.

- In January 2023, an outage of the FAA’s Notice to Air Missions (NOTAM) system, traced to a damaged database file, led to a nationwide ground stop and thousands of delayed flights in the U.S.

- Autonomous vehicles depend on sensor fusion and AI—both prone to failure in edge cases.

- Redundancy and fail-safe mechanisms are critical in aviation and rail systems.

Cybersecurity and System Failure

In the digital age, cyberattacks are a leading cause of system failure. Malware, ransomware, and DDoS attacks can cripple organizations in minutes.

Ransomware Attacks and Data Lockouts

Ransomware encrypts critical data, demanding payment for decryption. The 2021 Colonial Pipeline attack forced a shutdown of fuel supply across the U.S. East Coast.

- Attackers often exploit unpatched software or phishing vulnerabilities.

- Backups and air-gapped systems are essential for recovery.

- The average cost of a ransomware attack exceeds $5 million, including downtime and recovery.

Learn more about ransomware trends from CISA’s StopRansomware initiative.

DDoS Attacks and Service Overloads

Distributed Denial of Service (DDoS) attacks flood systems with traffic, overwhelming servers. In 2016, the Mirai botnet attacked the DNS provider Dyn, making major websites like Twitter and Netflix unreachable for many users.

- IoT devices with weak security are often hijacked to form botnets.

- Mitigation strategies include traffic filtering, rate limiting, and cloud-based protection.

- DDoS attacks are increasing in size and frequency—some exceed 1 Tbps.
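
Rate limiting, one of the mitigation strategies listed above, is often implemented with a token bucket. The sketch below is a minimal single-process version; the class name and parameters are hypothetical, and the `clock` argument is only there so the behavior can be tested without waiting. Production systems usually enforce limits at the edge (load balancer, CDN, or API gateway) rather than in application code.

```python
import time


class TokenBucket:
    """Allow bursts up to `capacity` requests, refilled at `rate` tokens per second."""

    def __init__(self, rate: float, capacity: float, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self.tokens = capacity
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill according to elapsed time, never exceeding the bucket capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False  # caller should reject or delay the request
```

A typical setup keeps one bucket per client (for example, per IP address or API key), so a single noisy source cannot exhaust capacity for everyone else.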

Insider Threats and Privilege Abuse

Not all threats come from outside. Employees or contractors with access can intentionally or accidentally cause system failure.

- Examples: data exfiltration, sabotage, or misconfiguration.

- Zero-trust architecture limits access based on need-to-know principles.

- User behavior analytics (UBA) can detect anomalous activity before damage occurs.
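
As a rough illustration of how behavior analytics can flag anomalous activity, the sketch below compares a new observation against a user's own history using a z-score. This is a simplified stand-in, not how any particular UBA product works; the function name, the threshold of 3.0, and the sample numbers are assumptions made for the example.

```python
from statistics import mean, stdev


def is_anomalous(history, value, threshold=3.0):
    """Flag `value` if it lies more than `threshold` standard deviations from the mean."""
    if len(history) < 2:
        return False  # not enough data to judge
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return value != mu
    return abs(value - mu) / sigma > threshold


# Hypothetical data: files downloaded per day by one user.
daily_downloads = [20, 22, 19, 21, 23, 20, 22]
suspicious = is_anomalous(daily_downloads, 400)  # far outside the usual range
normal = is_anomalous(daily_downloads, 22)       # within the usual range
```

Real deployments combine many signals (login times, locations, access patterns) and tune thresholds to keep false alarms manageable.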

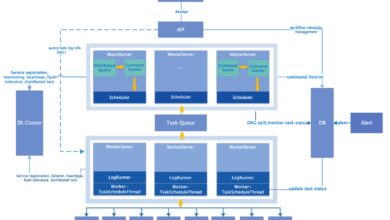

System Failure in Software and IT Networks

Software systems are especially prone to failure due to complexity, rapid deployment cycles, and dependency chains.

Cloud Outages and Service Downtime

Even giants like AWS, Google Cloud, and Azure suffer outages. In 2021, an AWS outage disrupted services like Slack, Airbnb, and Netflix.

- Causes include configuration errors, network partitioning, and power issues.

- Multi-region and multi-cloud strategies improve resilience.

- Service Level Agreements (SLAs) offer compensation but don’t prevent downtime.

Check real-time status on Downdetector to see ongoing outages.

Database Corruption and Data Loss

Databases are the backbone of modern applications. Corruption can occur due to hardware failure, software bugs, or improper shutdowns.

- Common signs: inconsistent records, missing tables, query failures.

- Regular backups, replication, and checksum validation are preventive measures.

- Point-in-time recovery allows rollback to a stable state before failure.
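
Checksum validation, mentioned above, can be sketched in a few lines with Python's standard `hashlib` module. The function names and the sample record are hypothetical; the idea is simply to store a digest when data is written and compare it when data is read back.

```python
import hashlib


def checksum(data: bytes) -> str:
    """Return the SHA-256 digest of `data` as a hex string."""
    return hashlib.sha256(data).hexdigest()


def verify(data: bytes, expected: str) -> bool:
    """Return True if `data` still matches the digest stored at write time."""
    return checksum(data) == expected


# Hypothetical record: store the digest alongside the data when writing.
record = b"account=1042;balance=250.00"
stored_digest = checksum(record)
```

Many databases and filesystems perform this kind of check internally at the page or block level; an application-level checksum adds a second line of defense for backups and exported files.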

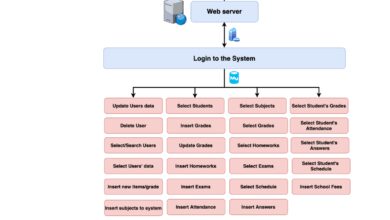

API Failures and Integration Breakdowns

Modern apps rely on APIs to communicate. When an API fails, dependent services collapse. In 2020, a Facebook API outage broke login systems for thousands of third-party apps.

- Causes: rate limiting, version incompatibility, server overload.

- Best practices: implement circuit breakers, retries with backoff, and fallback responses.

- Monitoring API health with tools like Postman or Datadog is crucial.
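
The best practices above (circuit breakers, retries with backoff, and fallback responses) can be sketched together as follows. All names and default values here are hypothetical, and the `clock` and `sleep` parameters exist only to make the behavior testable. Established libraries offer hardened versions of these patterns, so treat this as an illustration of the logic rather than production code.

```python
import time


class CircuitOpenError(Exception):
    """Raised when the breaker refuses to call a failing dependency."""


class CircuitBreaker:
    def __init__(self, max_failures=3, reset_after=30.0, clock=time.monotonic):
        self.max_failures = max_failures
        self.reset_after = reset_after
        self.clock = clock
        self.failures = 0
        self.opened_at = None

    def call(self, func, *args, **kwargs):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                raise CircuitOpenError("circuit open; skipping call")
            self.opened_at = None  # half-open: allow one trial call
        try:
            result = func(*args, **kwargs)
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = self.clock()
            raise
        self.failures = 0  # success closes the circuit
        return result


def call_with_retries(func, attempts=3, base_delay=0.5, fallback=None, sleep=time.sleep):
    """Retry with exponential backoff, then return `fallback` if all attempts fail."""
    for attempt in range(attempts):
        try:
            return func()
        except CircuitOpenError:
            break  # dependency is known to be down; do not keep retrying
        except Exception:
            if attempt < attempts - 1:
                sleep(base_delay * 2 ** attempt)  # 0.5s, 1s, 2s, ...
    return fallback
```

The two pieces complement each other: retries smooth over brief glitches, while the breaker stops a struggling dependency from being hammered with requests it cannot serve.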

The Role of Design Flaws in System Failure

Sometimes, failure is baked into the system from the start. Poor architecture, lack of redundancy, or over-complexity can doom a system before it even launches.

Lack of Redundancy and Single Points of Failure

A single point of failure (SPOF) is a component whose failure brings down the entire system. Redundancy—having backup components—eliminates SPOFs.

- Examples: dual power supplies, mirrored databases, load-balanced servers.

- High Availability (HA) systems use redundancy to achieve 99.999% uptime (“five nines”).

- Cost vs. reliability trade-offs must be carefully evaluated.
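
To put availability figures like "five nines" in perspective, the sketch below converts an availability percentage into allowed downtime per year and shows how redundancy changes the math. The function names are hypothetical, and the parallel formula assumes component failures are independent, which real systems only approximate.

```python
import math

MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600, ignoring leap years


def allowed_downtime_minutes(availability_percent: float) -> float:
    """Minutes of downtime per year permitted by a given availability target."""
    return MINUTES_PER_YEAR * (1 - availability_percent / 100)


def serial_availability(*availabilities: float) -> float:
    """All components must work: availabilities multiply."""
    return math.prod(availabilities)


def parallel_availability(*availabilities: float) -> float:
    """Redundant components: the system fails only if every one of them fails."""
    return 1 - math.prod(1 - a for a in availabilities)
```

Five nines works out to roughly 5.26 minutes of downtime per year. Two independent components at 99% each give about 98.01% when chained in series, but about 99.99% when run as redundant alternatives, which is why redundancy is the usual route to high availability.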

Over-Complexity and System Entropy

As systems grow, they become harder to manage. This “entropy” increases the likelihood of failure. The 2012 Knight Capital trading glitch, which lost $440 million in 45 minutes, was caused by legacy code interacting unpredictably with new software.

- Technical debt accumulates when quick fixes replace proper design.

- Microservices can reduce complexity but introduce new failure modes.

- Regular refactoring and documentation help manage system entropy.

Poor Error Handling and Recovery Mechanisms

Even when failures occur, good systems recover gracefully. Poor error handling leads to crashes instead of alerts or fallbacks.

- Examples: uncaught exceptions, infinite loops, memory leaks.

- Implement logging, alerting, and automated rollback procedures.

- Chaos engineering—like Netflix’s Chaos Monkey—tests resilience by injecting failures.

Case Studies of Major System Failures

History is filled with cautionary tales of system failure. Studying them helps us avoid repeating the same mistakes.

The 2003 Northeast Blackout

On August 14, 2003, a software bug in Ohio silenced a control-room alarm system, so operators did not see the grid overloading. Once the cascade began, it spread within minutes and blacked out eight U.S. states and parts of Canada.

- Root cause: failure in FirstEnergy’s alarm system and poor situational awareness.

- Impact: 55 million people without power, $6 billion in losses.

- Aftermath: New NERC reliability standards were implemented.

Read the full report at NERC’s official investigation.

The Therac-25 Radiation Therapy Machine

Between 1985 and 1987, the Therac-25 delivered lethal radiation doses to patients due to a race condition in its software.

- Design flaw: no hardware interlocks; relied solely on software safety.

- Result: at least six patients severely injured, several died.

- Legacy: became a case study in software safety and medical device regulation.
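
For readers unfamiliar with race conditions, here is a generic Python sketch of the underlying problem and its standard fix. It is not a reconstruction of the Therac-25 software; the class and function names are hypothetical. A race condition arises when two tasks read and update shared state without coordination, so the outcome depends on timing. Guarding the shared state with a lock makes the update atomic.

```python
import threading


class SafeCounter:
    """A counter whose read-modify-write step is protected by a lock."""

    def __init__(self):
        self.value = 0
        self._lock = threading.Lock()

    def increment(self):
        with self._lock:  # only one thread may update at a time
            self.value += 1


def run_workers(counter, workers=4, increments=10_000):
    """Increment the counter from several threads and return the final value."""
    def work():
        for _ in range(increments):
            counter.increment()

    threads = [threading.Thread(target=work) for _ in range(workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter.value
```

With the lock in place, the final value always equals workers times increments. Without it, updates can be lost depending on how the threads interleave, and such bugs may appear only rarely, which is what makes them so hard to catch in testing.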

“The Therac-25 accidents were not due to a single error, but a cascade of poor decisions.” — Nancy Leveson

The Boeing 737 MAX MCAS Failure

The Maneuvering Characteristics Augmentation System (MCAS) relied on a single angle-of-attack sensor. When that sensor fed it faulty data, MCAS repeatedly pushed the plane’s nose down, leading to two crashes and 346 deaths.

- Root cause: over-reliance on one sensor, inadequate pilot training, and poor oversight.

- System failure compounded by organizational culture and certification lapses.

- Aftermath: global grounding, redesign, and regulatory overhaul.
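
The general remedy for over-reliance on one sensor is to cross-check redundant sensors before acting. The sketch below shows one common approach, median voting across three or more readings. It is a generic illustration only and does not describe Boeing's actual redesign; the function name, tolerance, and sample readings are hypothetical.

```python
from statistics import median


def cross_check(readings, tolerance):
    """Return the median reading and the indices of sensors that disagree with it."""
    if len(readings) < 3:
        raise ValueError("median voting needs at least three independent sensors")
    mid = median(readings)
    suspects = [i for i, r in enumerate(readings) if abs(r - mid) > tolerance]
    return mid, suspects


# Hypothetical angle readings in degrees; the third sensor has failed.
value, suspects = cross_check([4.9, 5.1, 74.5], tolerance=2.0)
```

Here the median ignores the faulty reading, and the suspect list lets the system alert the crew or disable automation that depends on the bad sensor.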

For details, see the U.S. Senate Committee report on Boeing.

How to Prevent System Failure

While we can’t eliminate all risks, we can dramatically reduce the likelihood and impact of system failure through proactive strategies.

Implementing Redundancy and Failover Systems

Redundancy ensures that if one component fails, another takes over seamlessly. This is standard in aviation, data centers, and critical infrastructure.

- Examples: RAID arrays, clustered servers, dual internet links.

- Failover mechanisms must be tested regularly to ensure reliability.

- Geographic redundancy protects against regional disasters.

Regular Maintenance and System Audits

Preventive maintenance catches issues before they become failures. This includes software updates, hardware inspections, and security audits.

- Schedule patching cycles to minimize downtime.

- Use automated monitoring tools like Nagios or Zabbix.

- Conduct penetration testing and vulnerability scans at least annually, and again after major changes.

Adopting Chaos Engineering and Resilience Testing

Chaos engineering involves deliberately introducing failures to test system resilience. Netflix pioneered this with Chaos Monkey, which randomly terminates production instances.

- Principles: start small, automate experiments, minimize blast radius.

- Tools: Gremlin, Chaos Toolkit, AWS Fault Injection Simulator.

- Benefits: uncover hidden weaknesses, improve incident response.
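
The core idea of fault injection can be sketched in a few lines. This is a toy, in-process illustration and not how Chaos Monkey or the tools listed above work internally; the names `with_chaos`, `run_experiment`, and `InjectedFailure` are hypothetical. The wrapper makes a call fail with a chosen probability so you can observe whether the fallback path actually works.

```python
import random


class InjectedFailure(RuntimeError):
    """Marks failures that the experiment introduced on purpose."""


def with_chaos(func, failure_rate, rng=random.random):
    """Wrap `func` so that it fails with probability `failure_rate`."""
    def wrapper(*args, **kwargs):
        if rng() < failure_rate:
            raise InjectedFailure("failure injected by chaos experiment")
        return func(*args, **kwargs)
    return wrapper


def run_experiment(service, calls, fallback):
    """Call the service repeatedly and count how often the fallback was needed."""
    fallbacks_used = 0
    for _ in range(calls):
        try:
            service()
        except InjectedFailure:
            fallback()
            fallbacks_used += 1
    return fallbacks_used
```

Starting with a low failure rate in a test environment keeps the blast radius small, in line with the principles listed above.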

The Human Factor in System Failure

Despite automation, humans remain central to system design, operation, and recovery. Ignoring the human element is a recipe for failure.

Training and Skill Development

Well-trained staff can prevent, detect, and respond to system failure. Regular drills and simulations build muscle memory for crisis response.

- Conduct tabletop exercises for disaster scenarios.

- Invest in certifications like ITIL, CISSP, or PMP.

- Encourage cross-training to avoid knowledge silos.

Organizational Culture and Blame-Free Reporting

A culture of fear discourages reporting near-misses. High-reliability organizations (HROs) promote psychological safety.

- Adopt blame-free post-mortems to learn from failures.

- Encourage open communication and transparency.

- Leadership must model accountability without punishment.

“The goal is not to avoid failure, but to learn from it.” — Sidney Dekker, The Safety Anarchist

Decision-Making Under Pressure

During a system failure, time is short and stress is high. Poor decisions can worsen the situation.

- Use incident command systems (ICS) to structure response.

- Predefine escalation paths and communication protocols.

- Leverage runbooks and checklists to guide actions.

Emerging Technologies and Future Risks

As technology evolves, so do the risks of system failure. AI, quantum computing, and IoT introduce new vulnerabilities.

AI and Machine Learning System Failures

AI systems can fail due to biased training data, adversarial attacks, or unexpected inputs.

- Example: self-driving cars misclassifying objects in low-light conditions.

- Mitigation: robust testing, explainability, and human oversight.

- AI “hallucinations” in generative models can lead to incorrect decisions.

IoT Device Vulnerabilities

Billions of connected devices lack basic security, making them easy targets for botnets and attacks.

- Many devices ship with default passwords and unpatched firmware.

- Zero-day exploits in IoT can trigger large-scale system failures.

- Regulations like the U.S. IoT Cybersecurity Improvement Act aim to raise standards.

Quantum Computing Threats to Encryption

Future quantum computers could break current encryption (RSA, ECC), rendering secure communications vulnerable.

- Post-quantum cryptography is being developed to counter this.

- NIST published its first quantum-resistant standards (FIPS 203, 204, and 205) in 2024 and continues to evaluate additional algorithms.

- Organizations should begin planning for cryptographic transitions.

What is the most common cause of system failure?

Human error is frequently cited as the most common cause of system failure, followed closely by software bugs and hardware malfunctions. According to industry reports, misconfigurations, accidental deletions, and poor change management are leading contributors to unplanned outages.

How can organizations prevent system failure?

Organizations can prevent system failure by implementing redundancy, conducting regular maintenance, adopting chaos engineering, training staff, and fostering a blame-free culture. Proactive monitoring and incident response planning are also critical.

What is a cascading system failure?

A cascading system failure occurs when the failure of one component triggers the failure of subsequent components, leading to a widespread collapse. This is common in power grids and networked systems where load redistribution overwhelms remaining nodes.

Can AI prevent system failure?

Yes, AI can help prevent system failure by predicting hardware failures, detecting anomalies in network traffic, and automating responses. However, AI systems themselves can fail due to data bias or adversarial inputs, so they must be carefully designed and monitored.

What was the biggest system failure in history?

One of the biggest system failures was the 2003 Northeast Blackout, affecting 55 million people. Other notable failures include the Therac-25 radiation overdoses and the Boeing 737 MAX crashes, both rooted in systemic design and oversight failures.

System failure is not just a technical glitch; it is a complex interplay of technology, design, human behavior, and organizational culture. From power grids to software networks, the consequences can be catastrophic. But by understanding the root causes (hardware decay, software bugs, cyberattacks, design flaws, and human error), we can build more resilient systems. The lessons from past failures, like the Northeast Blackout or the Therac-25 tragedy, remind us that prevention is always better than recovery.

Through redundancy, proactive maintenance, chaos engineering, and a culture of learning, organizations can reduce risk and respond effectively when failures do occur. As technology evolves, new threats like AI instability and quantum computing vulnerabilities will emerge. Staying ahead requires constant vigilance, investment in safety, and a commitment to learning from every breakdown. The goal isn’t to achieve perfection, but to build systems that fail safely, recover quickly, and make us wiser with every incident.
